In this tutorial we are going to build an augmented reality example with ARKit, the framework Apple released with iOS 11, using Swift 4.

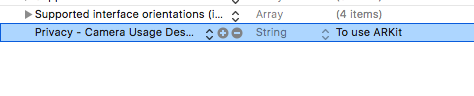

To produce augmented reality experiences, an app has to combine the iOS device camera with the device's motion features. Apple introduced ARKit for exactly this, and SceneKit renders the 3D content on top of the camera feed.

So we are going to use ARKit together with SceneKit.

Requirements:

Xcode 9 and a device with an A9 or later processor running iOS 11.
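The processor requirement can also be checked at runtime. The following is a minimal sketch, assuming you want to test for support before starting a session; the helper name deviceSupportsWorldTracking is hypothetical and not part of the original project.

```swift
import ARKit

/// Hypothetical helper: returns true when the current device can run
/// ARKit world tracking (A9 or later processor).
func deviceSupportsWorldTracking() -> Bool {
    return ARWorldTrackingConfiguration.isSupported
}
```

One way to use it is to call the helper in viewDidLoad() and show a message instead of the camera view when it returns false.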

We are going to add a cube to the real world.

Getting Started:

First create a new project: open Xcode, choose File -> New -> Project -> Single View App, then click Next. Enter 'ARKitAddingCube' as the product name, click Next again, and select the folder in which to save the project.

We are going to use the camera, so add the 'NSCameraUsageDescription' key to Info.plist with a short message explaining why the app needs camera access.

Then open ViewController.swift and add the following line below 'import UIKit'.

import ARKit

To get access to the camera we are going to add an ARSCNView as a subview. ARSCNView displays the camera feed for us.

Add the following property above the viewDidLoad() method.

var sceneView = ARSCNView()

Add the property as a subview by writing the following code inside the viewDidLoad() method.

sceneView.frame = view.bounds
view.addSubview(sceneView)

Build and run: nothing appears except a white screen. No worries, stop the app and follow the next steps.

As we know, camera capture is based on sessions, so here too we need to create and maintain a session. In ARKit this is an ARSession.

Every session runs with a configuration. In ARKit the base class is ARConfiguration (called ARSessionConfiguration in the early iOS 11 betas).

The good thing about ARSCNView is that there is no need to create the session ourselves: ARSCNView already has one. To run that session we still need a configuration, so create one as follows.

let configuration = ARWorldTrackingConfiguration() // named ARWorldTrackingSessionConfiguration in the early iOS 11 betas

Then run the session with the above configuration.

sceneView.session.run(configuration)

Build and run: a camera permission prompt appears. Allow camera access and we see the camera feed running on the device.
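Since the session keeps the camera running, it is common to start it when the view appears and pause it when the view goes away. The following is a minimal sketch of that pattern, assuming a view controller that owns the scene view; the class name LifecycleSketchViewController is hypothetical and the tutorial itself simply runs the session once.

```swift
import UIKit
import ARKit

// Hypothetical view controller used only to illustrate the session lifecycle.
class LifecycleSketchViewController: UIViewController {
    let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // Start (or resume) world tracking whenever the view is about to be shown.
        sceneView.session.run(ARWorldTrackingConfiguration())
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop camera capture and tracking while the view is off screen.
        sceneView.session.pause()
    }
}
```

Pausing the session while the view is hidden avoids running the camera and motion tracking when nothing is being displayed.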

Now it's time to add a cube to real-world space.

Before that, add a button to the view. Add the following two methods below the viewDidLoad() method.

@IBAction func addCubeButtonTapped(sender: UIButton) {
    print("Cube Button Tapped")
}

func addButton() {
    let button = UIButton()
    view.addSubview(button)
    button.translatesAutoresizingMaskIntoConstraints = false
    button.setTitle("Add a CUBE", for: .normal)
    button.setTitleColor(UIColor.red, for: .normal)
    button.backgroundColor = UIColor.white.withAlphaComponent(0.4)
    button.addTarget(self, action: #selector(addCubeButtonTapped(sender:)), for: .touchUpInside)

    // Constraints
    button.bottomAnchor.constraint(equalTo: view.bottomAnchor, constant: -8.0).isActive = true
    button.centerXAnchor.constraint(equalTo: view.centerXAnchor, constant: 0.0).isActive = true
    button.heightAnchor.constraint(equalToConstant: 50).isActive = true // a constraint has no effect until it is activated
}

Then call 'addButton()' at the end of the viewDidLoad() method.

Build and run, and we will see the button at the bottom.

This whole project does not use storyboards. If you want to know why, follow this link.

http://iosrevisited.blogspot.com/2017/08/11-reasons-why-not-to-use-storyboards.html

Now we are all set to add the cube.

Providing 3D Virtual Content with SceneKit

To add any object to the scene we need to create an SCNNode and add it as a child of the scene's root node.

Let's create the SCNNode as follows. Write the following code in the @IBAction method.

let cubeNode = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0)) // SceneKit/AR coordinates are in meters

The cube node is created with a height, width, and length of 0.1 meters.

Now, where should we position this cube? To make it easy to see, we place it 0.2 meters in front of the world origin, which is where the device was when the session started. Set the position of cubeNode as follows.

cubeNode.position = SCNVector3(0, 0, -0.2)

Then add cubeNode as a child node of the scene's root node.

self.sceneView.scene.rootNode.addChildNode(cubeNode)

Great! Build and run, tap the 'Add a CUBE' button, and we see a white cube. Move the device around if you cannot see the cube.
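If the cube is hard to find, ARKit's debug overlays can help show where the world origin is. The following is a minimal sketch, assuming you call it once after adding the scene view; the helper name enableDebugOverlays is hypothetical.

```swift
import ARKit

/// Hypothetical helper: draws the world-origin axes and the detected
/// feature points on top of the camera feed.
func enableDebugOverlays(on sceneView: ARSCNView) {
    sceneView.debugOptions = [ARSCNDebugOptions.showWorldOrigin,
                              ARSCNDebugOptions.showFeaturePoints]
}
```

With the overlays on, the cube at (0, 0, -0.2) should appear 0.2 meters along the negative z axis drawn at the origin.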

Tapping the 'Add a CUBE' button multiple times adds a new cube each time, but always at the same position, so we cannot tell the cubes apart.

Next step: adding multiple cubes to real-world space.

There is not much to do for this. We simply need to set each cube's position from the camera's current position.

To get the camera's position, add the following method.

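func getMyCameraCoordinates(sceneView: ARSCNView) -> MDLTransform {
    // currentFrame is nil until the session delivers its first frame;
    // fall back to an identity transform (the world origin) instead of crashing.
    guard let cameraTransform = sceneView.session.currentFrame?.camera.transform else {
        return MDLTransform()
    }
    return MDLTransform(matrix: cameraTransform)
}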

The above method gives us the camera's position in world space, so we assign that position to the cube node.
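The same position can also be read straight from the camera's 4x4 transform, whose fourth column holds the translation. The following is a minimal sketch of that alternative; the function name position(from:) is hypothetical and the tutorial continues to use the MDLTransform version.

```swift
import simd
import SceneKit

/// Hypothetical helper: reads the translation stored in the fourth column
/// of a 4x4 transform and returns it as a SceneKit vector.
func position(from transform: simd_float4x4) -> SCNVector3 {
    let t = transform.columns.3
    return SCNVector3(t.x, t.y, t.z)
}
```

It would be called with the camera transform of the current frame, for example position(from: frame.camera.transform).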

Replace the code inside the 'addCubeButtonTapped' method with the following code.

DispatchQueue.main.async {
    print("cube button tapped")
    let cubeNode = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0))
    let cc = self.getMyCameraCoordinates(sceneView: self.sceneView)
    cubeNode.position = SCNVector3(cc.translation.x, cc.translation.y, cc.translation.z)
    self.sceneView.scene.rootNode.addChildNode(cubeNode)
}

In the above code we assign the camera's position to the cube node.
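Placing a cube exactly at the camera's position means the camera starts inside it. One possible variation, sketched here under assumptions, moves the cube a short distance along the camera's viewing direction instead. The helper name transformInFront(of:distance:) is hypothetical and not part of the original project.

```swift
import simd

/// Hypothetical helper: returns `cameraTransform` moved `distance` meters
/// along the camera's viewing direction.
func transformInFront(of cameraTransform: simd_float4x4, distance: Float) -> simd_float4x4 {
    var offset = matrix_identity_float4x4
    offset.columns.3.z = -distance // the camera looks down its local -Z axis
    return simd_mul(cameraTransform, offset)
}

// Possible use inside addCubeButtonTapped (requires ARKit, so shown as a comment):
// if let frame = sceneView.session.currentFrame {
//     cubeNode.simdTransform = transformInFront(of: frame.camera.transform, distance: 0.2)
// }
```

Because the offset is applied in the camera's own coordinate space, the cube ends up in front of wherever the device is pointing when the button is tapped, and it also takes on the camera's orientation.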

Done. Build and run, and we will see the camera feed with the 'Add a CUBE' button.

Now tap the button and wait until the cube appears. Because the cube is created at the camera's own position, step back if you cannot see it. Move your device as you like and observe the added cube: it won't change its position. That's what augmented reality means.

Your final output will look like the following video.

Download the sample project with examples:
